How to Succeed with a Design System

A design system can be an incredible boon to an organization. It helps codify an organization’s brand and voice and empowers folks outside design and engineering to create awesome experiences. We’ve previously outlined three common characteristics for a successful design system. However, for a design system to succeed beyond its initial launch, it needs two additional critical components:

A product owner who champions the design system in the organization and ensures ongoing feature development and maintenance.

A commitment to learning from and applying the lessons of traditional software engineering.

Let’s dive in a little deeper on the second point.

A Design System is Software

Treating a design system like any other software project means you can and should incorporate things like issue tracking, sprint planning (and sprints, for that matter), continuous integration, and of course automated testing.

It’s easy to get into a debate around Agile, but the specific ceremonies aren’t as important as the mindset shift: how do we treat a design system like software? Specifically, how do we perform automated testing for a design system?

Testing

In our 2019 Design Systems Survey, only 24% of respondents said they implement automated testing on a design system. This number is far too low given that the more successful a design system is, the more places it’s used. A bug in a design system can therefore surface in every product that consumes it, giving it a much wider reach than a bug confined to a single application.

A design system is really a collection of modules that can be composed in a multitude of ways. In order to have confidence that changes made to one component don’t create a bug in another, we need testing of individual components and testing of groups of components.

The Design System Testing Pyramid

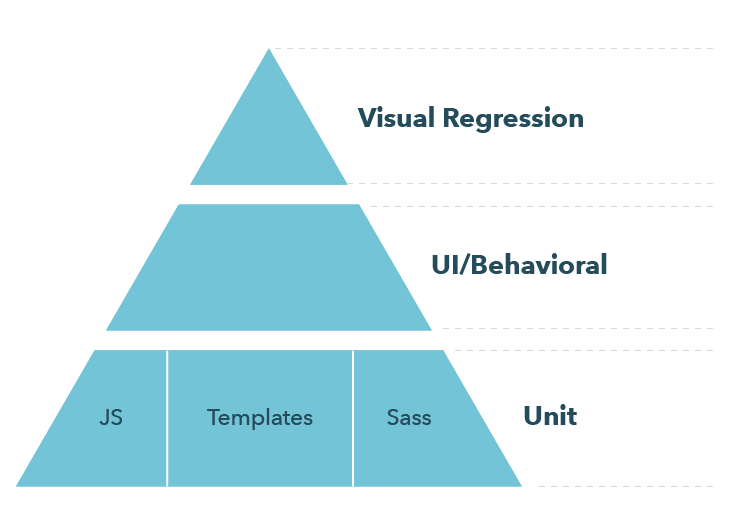

We often use a testing pyramid to explain the various types of tests and their expected roles and outcomes. We can use the testing pyramid visual for design system testing as well!

The Design System Testing Pyramid represents our ideal test suite. The wider the layer, the more tests you’ll have. And the higher on the pyramid, the more value and cost associated with the tests. Value refers to the confidence a test provides in the design system, and cost refers to the time it takes to run a test and the rigidity it applies to your design system.

For example, a single visual regression test can tell you that an entire collection of visual components can be composed into a page and their interactions are working as expected. Running this test is time-consuming, but it gives you a lot of confidence in the overall design system in a single run. At the same time, that same test can hamstring future changes you want to make in components, how they integrate, etc.

On the other end of our pyramid, a Sass mixin or JavaScript function may have 2–3 focused unit tests that are each very fast but only give us narrow confidence around the Sass mixin, template, or JavaScript function. The cost of these tests is relatively low because they tend to be fast. We can change, remove, or replace the code and tests without broad impact.

For these reasons, it’s important to keep evaluating the shape of our test suite to find a strong balance between cost and value at different levels of the pyramid.

The top level of our pyramid encompasses visual regression tests, followed by UI/behavioral tests in the middle, and both are supported by a wide base of unit tests. Stay tuned as we release a series of articles focusing on the benefits and best practices of each level of this Design System Testing Pyramid.

Continuous Integration

Just like with other projects, we can leverage our favorite JavaScript testing tools like Mocha and Chai for unit testing (even our Sass!) and some integration testing, and we can use things like BackstopJS, Cypress, or Percy for full integration/regression testing. The aim of these tools doesn’t change: provide confidence about changes and feedback when things break.

Now, these tools are great for you to run locally and get feedback about the health of the component in question or the system in general, but they really shine in a continuous integration environment like CircleCI or Jenkins. Continuous integration opens up the possibility for PR review apps along with automated unit testing for every PR. A solid continuous integration setup gives additional confidence that new releases don’t introduce new bugs. Surfacing this type of feedback right in line with pull requests and code reviews has the exact same benefits for a design system as it does for a “traditional” software project.

Workflow Improvements

There’s another benefit to embracing automated testing for design systems that might not be immediately obvious: better feedback loops for local development. Instead of scaffolding up the entire system, having a test-first approach allows you to work on a new component in isolation with tests driving the feedback process.

On the Shoulders of Giants

The software world has decades of experience in managing the growth and creation of systems. Not all of that effort and experience has actually worked, but those failures are also valuable. By treating a design system like another software project, you give it a greater chance of success.

We have shared more thoughts on automated testing and design systems on The Foundry. Learn more about our experiences in visual regression testing, accessibility testing, and testing components and behaviors.
