We’ve evaluated automated accessibility testing tools to help you determine which is best for your project. Learn the strengths and weaknesses of Google Lighthouse.

A wide range of tools can help you find accessibility issues, from browser plugins and website scanners to JavaScript libraries that analyze your codebase. Each tool has its own strengths and weaknesses when it comes to catching accessibility issues. Sparkbox’s goal is to research a variety of accessibility tools and empower anyone to run accessibility audits. In this article, I will review Lighthouse from Google by using it on a testing site that has planned accessibility issues. This review rates the tool in seven areas: ease of setup, ease of use, reputable sources, accuracy of feedback, clarity of feedback, cost, and strengths and limitations.

Lighthouse is a versatile tool that can be set up and used in a variety of ways, which makes it a good option for both technical and non-technical users. In addition to the approaches listed below, Lighthouse accessibility audits can be included as part of a continuous integration pipeline. We aren’t ranking that setup in this article, but if you are interested, you should read Katy Bowman’s article “An Introduction To Running Lighthouse Programmatically.”

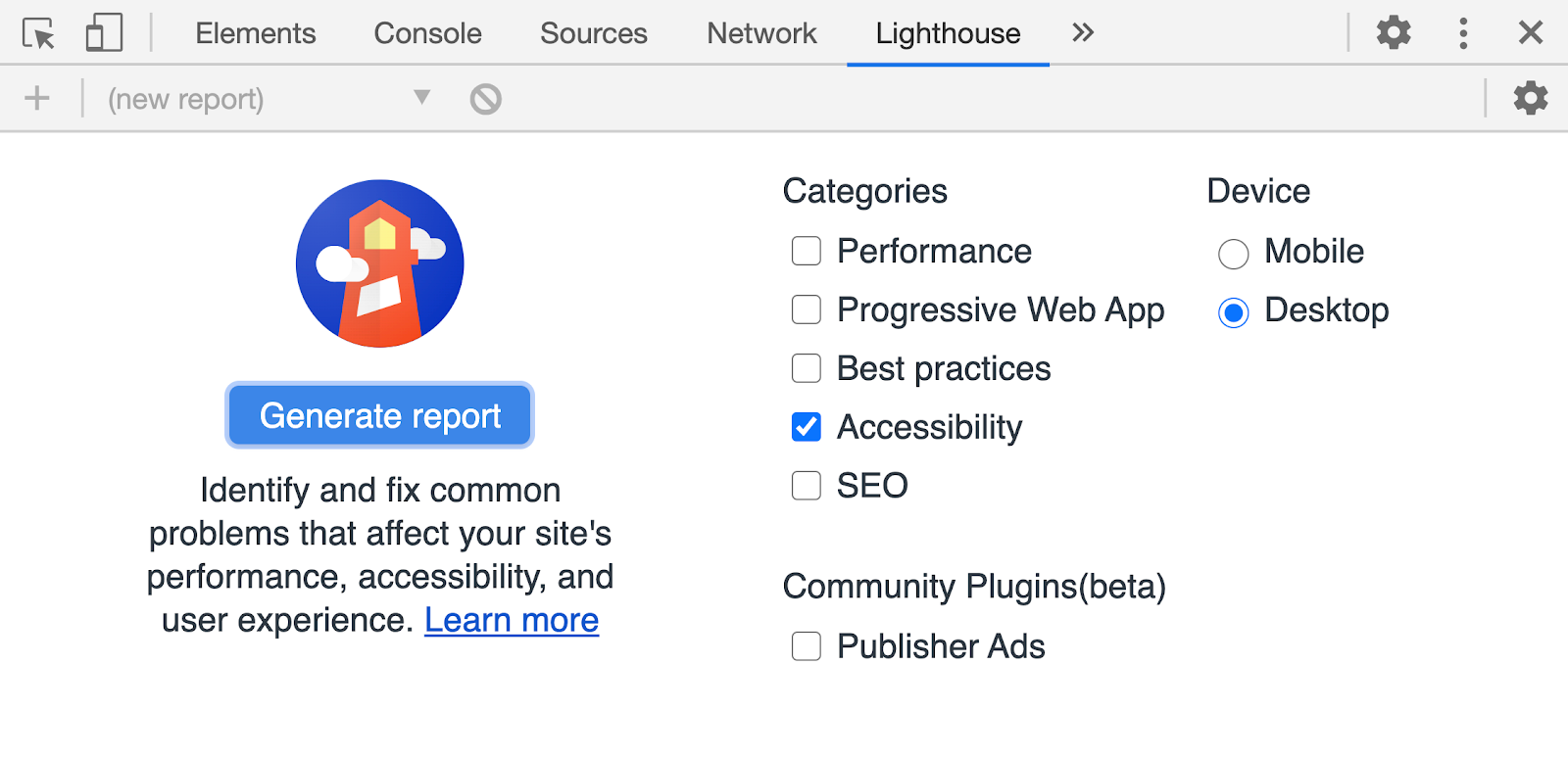

The Lighthouse setup we review in this article uses the Lighthouse tab in Chrome DevTools.

In order to fully vet accessibility tools like this one, Sparkbox created a demo site with intentional errors. This site is not related to or connected with any live content or organization.

Ease of Setup: Outstanding (4/4)

Lighthouse is available automatically in Chrome, with no setup or extensions to install, and can be used to test both local sites and authenticated pages. Lighthouse can be accessed by opening Chrome Developer Tools and choosing the Lighthouse tab at the top.

Lighthouse is also available as a browser extension for Chrome and Firefox. Google advises against using Lighthouse this way because the extension cannot test local sites or authenticated pages, both of which DevTools can handle. The extension also runs the full suite of audits and does not let the user choose only the ones they want. Unfortunately, this means that in many circumstances Lighthouse can only be used in Chrome. Additionally, running Lighthouse on a multi-page web application could become tedious.

Ease of Use: Exceeds Expectations (3/4)

The Lighthouse tab in Chrome DevTools has a very straightforward interface that is easy to use. It allows you to choose to run just an accessibility audit or run additional audits, including Performance, Progressive Web App, Best Practices, and SEO. It also allows the user to choose between running the audit on desktop or a simulated mobile device. Once those choices have been made, pressing Generate report will run the selected audit(s) for you directly in the browser.

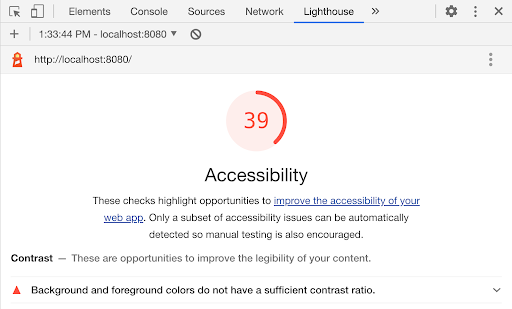

When just the Accessibility audit is run, the report is generated quickly (within just a few seconds) and is displayed directly in the Lighthouse tab in DevTools. The report includes a list of any accessibility issues that were found and which elements failed each accessibility test. Each issue also includes a brief description of the problem and links to more information regarding those issues in Google’s Lighthouse documentation. (See the Clarity of Feedback section of this article for more information.)

Unfortunately, the documentation for using Lighthouse was surprisingly out of date. It directs the user to the “Audits” tab in the DevTools, which is where the Lighthouse controls were previously located. Now, however, Lighthouse is available in its own tab. That’s something a user might figure out relatively quickly, but it’s disappointing that the documentation has not been properly maintained.

Lighthouse also has something called Lighthouse Viewer, which is intended to allow the user to share the accessibility audit by either downloading a JSON file from the audit page or by creating a GitHub gist with a URL that can be used in the viewer. Even in theory, this feels like a lot of extra steps to share the audit. And in practice, the Lighthouse Viewer documentation was either incomplete or out of date because it was not possible to share the audit using a gist by following the instructions in the documentation.
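For readers who would rather work with the downloaded JSON file directly, here is a minimal sketch that pulls the failed accessibility audits out of a report. It assumes the report follows the general shape of Lighthouse's JSON output (a `categories.accessibility.auditRefs` list that points into an `audits` map). The interfaces are a trimmed-down assumption rather than the full schema, and `sampleReport` is hand-written for illustration, not output from a real audit.

```typescript
// Trimmed-down view of a Lighthouse JSON report; real reports contain many more fields.
interface AuditResult {
  title: string;
  // Assumed convention: 1 = pass, 0 = fail, null = not scored (manual or not applicable).
  score: number | null;
}

interface LighthouseReport {
  categories: {
    accessibility?: { score: number | null; auditRefs: { id: string; weight: number }[] };
  };
  audits: { [id: string]: AuditResult };
}

// Collect the titles of accessibility audits that were scored and did not pass.
function failedAccessibilityAudits(report: LighthouseReport): string[] {
  const category = report.categories.accessibility;
  if (!category) {
    return [];
  }
  const titles: string[] = [];
  for (const ref of category.auditRefs) {
    const audit = report.audits[ref.id];
    if (audit && audit.score !== null && audit.score < 1) {
      titles.push(audit.title);
    }
  }
  return titles;
}

// Hand-written sample for illustration only.
const sampleReport: LighthouseReport = {
  categories: {
    accessibility: {
      score: 0.59,
      auditRefs: [
        { id: "image-alt", weight: 10 },
        { id: "link-name", weight: 7 },
        { id: "focus-traps", weight: 0 },
      ],
    },
  },
  audits: {
    "image-alt": { title: "Image elements have [alt] attributes", score: 1 },
    "link-name": { title: "Links do not have a discernible name", score: 0 },
    "focus-traps": { title: "User focus is not accidentally trapped in a region", score: null },
  },
};

// Only the "link-name" audit is both scored and failing in the sample.
const failedTitles: string[] = failedAccessibilityAudits(sampleReport);
```

A short list like `failedTitles` can be pasted into a ticket or chat message, which may be a lighter way to share results than the Viewer.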

One disadvantage of using Lighthouse in the browser is that only one page can be audited at a time, which makes the process cumbersome for sites with a large number of pages. Audits must also be re-run manually if a page needs to be rechecked, such as when a bug is fixed. This is where running Lighthouse programmatically rather than in the browser has advantages.
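As a rough illustration of the programmatic route, the sketch below builds one Lighthouse CLI command per page so that a whole list of pages can be audited (and re-audited) without clicking through DevTools. It assumes the Lighthouse npm package is available through `npx` and relies on the CLI's `--only-categories`, `--output`, and `--output-path` options. The page list, the `./reports` directory, and the helper names are hypothetical.

```typescript
// Turn a URL into a file-system-friendly slug for naming report files.
function slugFromUrl(url: string): string {
  return url
    .replace(/^https?:\/\//i, "")
    .replace(/[^a-z0-9]+/gi, "-")
    .replace(/^-+|-+$/g, "")
    .toLowerCase();
}

// Build the argument list for one accessibility-only Lighthouse CLI run.
function lighthouseArgs(url: string, reportDir: string): string[] {
  return [
    url,
    "--only-categories=accessibility",
    "--output=json",
    `--output-path=${reportDir}/${slugFromUrl(url)}.json`,
  ];
}

// Hypothetical page list; replace it with the pages of the site under test.
const pagesToAudit: string[] = [
  "https://example.com/",
  "https://example.com/astronauts/",
];

// One shell command per page, ready to paste into a script or CI step.
const auditCommands: string[] = pagesToAudit.map((url) =>
  ["npx", "lighthouse"].concat(lighthouseArgs(url, "./reports")).join(" ")
);
```

The sketch only produces the command strings. Running them, or calling Lighthouse's Node module instead, is left to whatever script or pipeline you already use.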

Reputable Sources: Meets Expectations (2/4)

The most prominent feature of the Lighthouse accessibility audit report is the accessibility score, located at the top of the report. This score is a weighted average of all of the accessibility audits Lighthouse runs on the current page and is based on Deque’s axe user impact assessments (which Deque uses for its own axe browser extension). These user impact assessments are directly based on WCAG 2.0 and WCAG 2.1 for A and AA and are a pretty reliable resource.
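To make the idea of a weighted average concrete, here is a small sketch of how a score like this could be computed from pass/fail audits. The audit weights below are hypothetical values chosen for the arithmetic, not Lighthouse's published weights, and the function is an illustration of the technique rather than Lighthouse's actual implementation.

```typescript
interface ScoredAudit {
  id: string;
  weight: number; // hypothetical weight; heavier audits move the score more
  passed: boolean;
}

// Weighted average of pass/fail audits, expressed on a 0-100 scale.
function weightedAccessibilityScore(audits: ScoredAudit[]): number {
  let totalWeight = 0;
  let passedWeight = 0;
  for (const audit of audits) {
    totalWeight += audit.weight;
    if (audit.passed) {
      passedWeight += audit.weight;
    }
  }
  // Assumption of this sketch: with nothing to weigh, report a perfect score.
  if (totalWeight === 0) {
    return 100;
  }
  return Math.round((passedWeight / totalWeight) * 100);
}

const exampleAudits: ScoredAudit[] = [
  { id: "image-alt", weight: 10, passed: true },
  { id: "color-contrast", weight: 7, passed: false },
  { id: "html-has-lang", weight: 3, passed: true },
];

// 13 of the 20 weight points passed, so the score is 65.
const exampleScore: number = weightedAccessibilityScore(exampleAudits);
```

With this kind of scoring, one failing high-weight audit lowers the score more than several failing low-weight audits, which is worth keeping in mind when reading the number at the top of the report.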

However, despite being based on axe’s list, Lighthouse still missed issues that the axe extension was able to catch, so the implementation is not consistent. Also, compared to axe, Lighthouse does not do as well at letting the user know which WCAG rules are being violated. (Check out the section below on Clarity of Feedback for more details.)

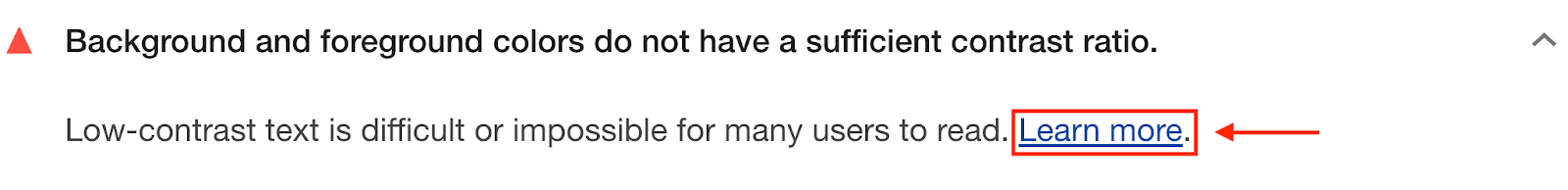

Lighthouse also loses some credibility due to an obvious accessibility failure directly in the audit report it generates. The links in the report that lead to more information about the individual accessibility issues all read “Learn More.” This is a poor accessibility practice that goes against WCAG 2.4.4 (Level A), which says that the purpose of a link should be able to be determined from the link text alone or from its programmatically determined link context. We’d like to see a tool dedicated to accessibility pay more attention to accessibility issues in its own interface.
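A check for this kind of link text is simple to sketch. The example below flags links whose name is a generic phrase such as “Learn More.” It is a simplified illustration: the phrase list is an assumption, only `aria-label` is considered as an override of the visible text, and a flagged link is a candidate for human review rather than an automatic WCAG failure, since surrounding context can also satisfy 2.4.4.

```typescript
interface LinkInfo {
  text: string; // visible link text
  ariaLabel?: string; // optional aria-label, which replaces the visible text as the link's name
}

// Illustrative phrase list only; extend it for your own content.
const GENERIC_PHRASES: string[] = ["learn more", "click here", "read more", "more", "here"];

// Lowercase, collapse whitespace, and drop trailing punctuation before comparing.
function normalizeLinkName(name: string): string {
  return name
    .trim()
    .replace(/\s+/g, " ")
    .replace(/[.!>]+$/, "")
    .trim()
    .toLowerCase();
}

// Return the links whose effective name is one of the generic phrases.
function linksNeedingReview(links: LinkInfo[]): LinkInfo[] {
  return links.filter((link) => {
    const name = normalizeLinkName(link.ariaLabel ? link.ariaLabel : link.text);
    return GENERIC_PHRASES.indexOf(name) !== -1;
  });
}

// Hypothetical links resembling the ones described in the report.
const reportLinks: LinkInfo[] = [
  { text: "Learn More" },
  { text: "Learn More", ariaLabel: "Learn more about color contrast" },
  { text: "Lighthouse scoring documentation" },
];

// Only the first link is flagged; the second has a descriptive aria-label.
const flaggedLinks: LinkInfo[] = linksNeedingReview(reportLinks);
```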

Accuracy of Feedback: Meets Expectations (2/4)

Lighthouse caught many, but not all, of the computer-discoverable accessibility issues that were built into the site. It failed to catch that the target click area was less than 44px x 44px; that there were duplicate id attributes; that there was duplicate link text; and that there was a landmark inside of a landmark.

A few issues are interesting to note. First, while we considered a missing skip-to-content link to be something that an automated tool should catch, it appears that Lighthouse still passed the site in this area because there were headings and landmarks present. Depending on the screen reader mode being used, this would effectively give the user other ways to skip the navigation without a skip-to-content link.

Secondly, our NASA test site has duplicate ids (specifically the headings on the astronaut bios). According to Lighthouse, these duplicate ids passed in terms of accessibility because the elements with the duplicate ids were not focusable.
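The difference between “any duplicate id” and “duplicate ids that involve a focusable element” can be sketched as follows. This is an illustration of the distinction described above, not Lighthouse's actual implementation, and the `ElementSummary` shape and sample data are hypothetical.

```typescript
interface ElementSummary {
  id: string;
  focusable: boolean;
}

// Group elements by their id attribute.
function groupById(elements: ElementSummary[]): { [id: string]: ElementSummary[] } {
  const groups: { [id: string]: ElementSummary[] } = {};
  for (const element of elements) {
    const group = groups[element.id] || [];
    group.push(element);
    groups[element.id] = group;
  }
  return groups;
}

// List ids used more than once. When onlyFocusable is true, a duplicate id is
// reported only if at least one element sharing it is focusable.
function duplicateIds(elements: ElementSummary[], onlyFocusable: boolean): string[] {
  const groups = groupById(elements);
  return Object.keys(groups).filter((id) => {
    const group = groups[id] || [];
    if (group.length < 2) {
      return false;
    }
    return !onlyFocusable || group.some((element) => element.focusable);
  });
}

// Hypothetical page summary: duplicated headings plus a duplicated form field id.
const pageElements: ElementSummary[] = [
  { id: "bio-heading", focusable: false },
  { id: "bio-heading", focusable: false },
  { id: "email", focusable: true },
  { id: "email", focusable: false },
  { id: "main", focusable: false },
];

const allDuplicates: string[] = duplicateIds(pageElements, false); // "bio-heading" and "email"
const focusableDuplicates: string[] = duplicateIds(pageElements, true); // "email" only
```

Under the stricter reading, the duplicated heading ids are reported. Under the focusable-only reading, they pass, which matches the behavior we saw on the test site.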

Interestingly, although we would not expect an illogical tab order to be computer-discoverable (a human should determine if the tab order makes sense), Lighthouse did catch the fact that one of the elements on the site had a tabindex value greater than 0, which would interfere with the expected tab order.

Lighthouse did catch one issue that we did not have on our list, which was that one of our links (the one that directs a user to Unsplash.com) had no discernible text.

Clarity of Feedback: Meets Expectations (2/4)

The Lighthouse accessibility audit generates a list of all accessibility issues that it detects and an overall accessibility score, which rates the accessibility of the page on a scale of 0–100. Lighthouse does have documentation on how the accessibility score is calculated, but that information is not immediately available within DevTools or the report itself, and it would be helpful if that information were more readily available to users.

Following the accessibility score is an accordion-style list of the accessibility issues found by Lighthouse. Each section includes a brief description of the issue and presents a list of elements that fail that particular audit. Each section includes a link to the Lighthouse documentation that further explains the accessibility issue and provides some suggestions on how to resolve it.

The report has some features that make it easy to determine which elements are failing a check. Hovering over any of the element tags listed under an issue will highlight the offending element in the browser window so the user can visually identify which one is failing. Clicking on one of those elements takes the user to the exact line of code (in the HTML shown in the Elements tab in DevTools) where the problematic element exists. This can be useful for troubleshooting or preparing to log an accessibility bug. Unfortunately, it’s not initially obvious that these features are available, and I talked to several users who weren’t aware that those features existed.

I also found the documentation for each of the issues to be pretty inconsistent. Some, like “Background and foreground colors do not have a sufficient contrast ratio,” directly link to the specific WCAG 2.1 success criteria used to evaluate the issue. Others, like “Document doesn’t have a `<title>` element,” don’t reference WCAG specifications at all. For the most part, the documentation relies on educating the user by sending them to Deque, WebAIM, or other external resources rather than providing the user with the information they need in one easy-to-find location.
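For readers who follow the contrast link and want to see what the check involves, here is a minimal sketch of the WCAG 2.x contrast ratio calculation, using the relative luminance formula from the WCAG definitions and the Level AA thresholds of 4.5:1 for normal text and 3:1 for large text. The function names are my own, and the sketch ignores details a real tool has to handle, such as transparency and background images.

```typescript
type Rgb = [number, number, number]; // 8-bit sRGB channels, 0-255

// Convert one 8-bit sRGB channel to its linear value, per the WCAG definition.
function linearizeChannel(channel: number): number {
  const c = channel / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Relative luminance of a color: 0 for black, 1 for white.
function relativeLuminance(color: Rgb): number {
  const [r, g, b] = color;
  return 0.2126 * linearizeChannel(r) + 0.7152 * linearizeChannel(g) + 0.0722 * linearizeChannel(b);
}

// Contrast ratio between two colors, from 1 (identical) to 21 (black on white).
function contrastRatio(foreground: Rgb, background: Rgb): number {
  const a = relativeLuminance(foreground);
  const b = relativeLuminance(background);
  const lighter = Math.max(a, b);
  const darker = Math.min(a, b);
  return (lighter + 0.05) / (darker + 0.05);
}

// WCAG Level AA thresholds: 4.5:1 for normal text, 3:1 for large text.
function meetsAaContrast(ratio: number, isLargeText: boolean): boolean {
  return ratio >= (isLargeText ? 3 : 4.5);
}

// Mid-gray (#777777) text on a white background.
const grayOnWhite: number = contrastRatio([119, 119, 119], [255, 255, 255]);
const passesAsBodyText: boolean = meetsAaContrast(grayOnWhite, false);
const passesAsLargeText: boolean = meetsAaContrast(grayOnWhite, true);
```

The gray-on-white pair lands just under 4.5:1, so it falls short for small text while passing for large text, which is why the test site lists the two contrast issues separately.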

What I do like about Lighthouse’s report is that, in addition to showing the audits that failed and the issues causing them, it does show the user which audits passed and which audits were not applicable to their site. This can help the user understand more about accessibility on their site.

I also really appreciate the “Additional Items to Manually Check” section. At Sparkbox, we recognize that automated testing is only a first step in making sure that a site is accessible. There are numerous accessibility issues that can only be accurately tested by a human, and I was happy to see Lighthouse reinforcing that idea by including this section, which suggests additional accessibility tests that should be conducted manually.

Cost: Outstanding (4/4)

Lighthouse is free to use in all of its forms. Of course, it is a Google product, and Google is known to collect all kinds of information on its users, so be aware of that if it is something that concerns you.

Strengths and Limitations: Exceeds Expectations (3/4)

While I think Lighthouse in DevTools has a lot of room for improvement, both in its implementation and in its documentation, the ability to run audits on a variety of metrics all in one place is a definite strength. While it involves a more complicated setup, running Lighthouse programmatically would solve many of the challenges that a user encounters in the DevTools version.

Conclusion: 👍 Recommend (21/28)

Lighthouse in DevTools has a lot of potential as an auditing tool, but it feels underdeveloped for something that comes from a company with such a large budget. However, for a user who is interested in doing an accessibility audit and doesn’t need to get into the details (like WCAG specifications), this is still a good way to catch a lot of accessibility issues. There are other tools we would recommend above Lighthouse if you are looking for an accessibility audit that can be run in the browser. That being said, running Lighthouse programmatically, rather than within the browser, has many additional advantages that would sway me to recommend using that implementation instead.

Accessibility Issues Found

| Test Site Issue | Tools Should Find | People Should Find | Lighthouse Found |
|---|---|---|---|
| Insufficient contrast in large text | ✅ | — | ✅ |
| Insufficient contrast in small text | ✅ | — | ✅ |
| Form labels not associated with inputs | ✅ | — | ✅ |
| Missing alt attribute | ✅ | — | ✅ |
| Missing lang attribute on html element | ✅ | — | ✅ |
| Missing title element | ✅ | — | ✅ |
| Landmark inside of a landmark | ✅ | — | ❌ |
| Heading hierarchy is incorrect | ✅ | — | ✅ |
| Unordered list missing containing ul tag | ✅ | — | ✅ |
| ID attributes with the same name | ✅ | — | ❌ |
| Target for click area is less than 44px by 44px | ✅ | — | ❌ |
| Duplicate link text (lots of “Click Here”s or “Learn More”s) | ✅ | — | ❌ |
| div soup | ✅ | — | ❌ |
| Funky tab order | — | ✅ | ✅ |
| Missing skip-to-content link | — | ✅ | ❌ |
| Using alt text on decorative images that don’t need it | — | ✅ | ❌ |
| Alt text with unhelpful text, not relevant, or no text when needed | — | ✅ | ❌ |
| Page title is not relevant to the content on the page (missing) | — | ✅ | ❌ |
| Has technical jargon | — | ✅ | ❌ |
| Using only color to show error and success messages | — | ✅ | ❌ |
| Removed focus (either on certain objects or the entire site) | — | ✅ | ❌ |
| Form helper text not associated with inputs | — | ✅ | ❌ |
| Pop-up that doesn’t close when you tab through it | — | ✅ | ❌ |