We’ve evaluated automated accessibility testing tools to help you determine which is best for your project. Learn the strengths and weaknesses of the IBM Equal Access browser extension.

A wide range of tools can help you find accessibility issues, from browser extensions and website scanners to JavaScript libraries that analyze your codebase. Each tool has its own strengths and weaknesses when it comes to catching accessibility issues. Sparkbox’s goal is to research a variety of accessibility tools and empower anyone to run accessibility audits. In this article, I will review the IBM Equal Access Accessibility Checker browser extension by using it on a testing site that has planned accessibility issues. This review will rate the extension based on the following areas:

Ease of Setup

Ease of Use

Reputable Sources

Accuracy of Feedback

Clarity of Feedback

Cost

Strengths and Limitations

Watch a walkthrough of the tool and a short review, or keep scrolling to read the full review. Jump to the conclusion if you’re in a hurry.


In order to fully vet accessibility tools like this one, Sparkbox created a demo site with intentional errors. This site is not related to or connected with any live content or organization.

Ease of Setup - Outstanding (4/4)

The IBM browser extension is available for Chrome, Edge, and Firefox, and it takes less than a minute to install. Once you’ve added it to your browser, you can open the developer tools and navigate to the “Accessibility Assessment” tab to start scanning pages for issues.

I will note that the extension’s naming is a little inconsistent, which can be confusing. The documentation for the rules used by the extension and IBM’s related tools lives on the IBM Equal Access Toolkit site, the browser extension itself is called “IBM Equal Access Accessibility Checker”, and the extension’s tab in developer tools is called “Accessibility Assessment”. If I were to ask another developer to use the extension to check something, I’d likely end up asking them to use “IBM’s thing” and send them a link rather than try to pick the right name.

Ease of Use - Exceeds Expectations (3/4)

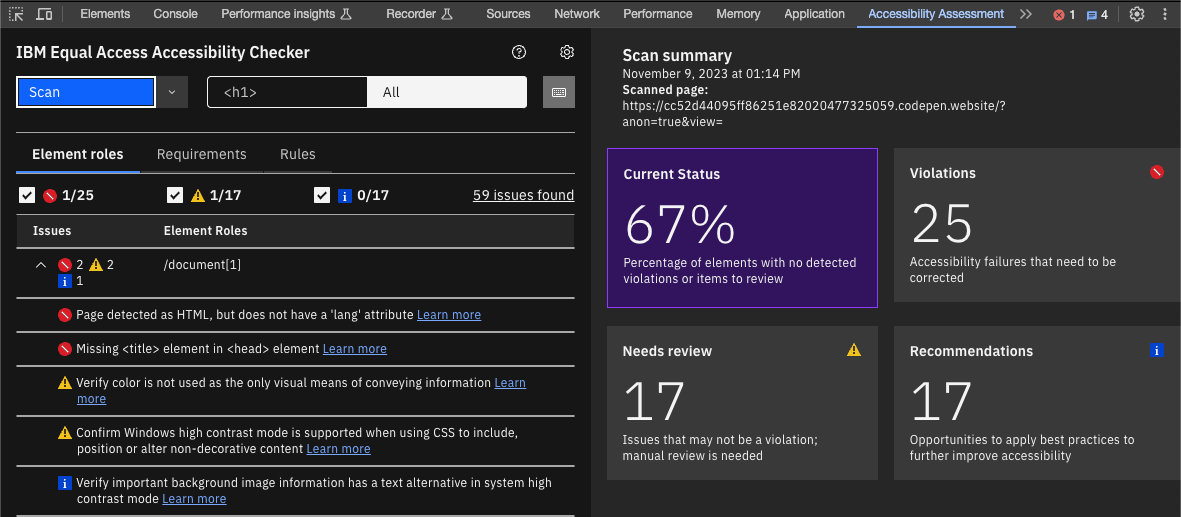

Overall, the extension is straightforward and intuitive to use. Once the developer tools are open and you’re in the “Accessibility Assessment” tab, you can click “Scan” to analyze the current page. This generates a list of issues that are marked as “Violation”, “Needs review”, or “Recommendation”. There are three tabs that organize the issues differently:

Element roles: sorted by where the issue occurs in the page

Requirements: sorted by WCAG 2.2 success criteria

Rules: sorted by severity with a description of the IBM rule check that failed

There is also a focus mode that filters out issues unrelated to a selected element. This may be useful for inspecting a complicated widget or for working through a page with many issues.

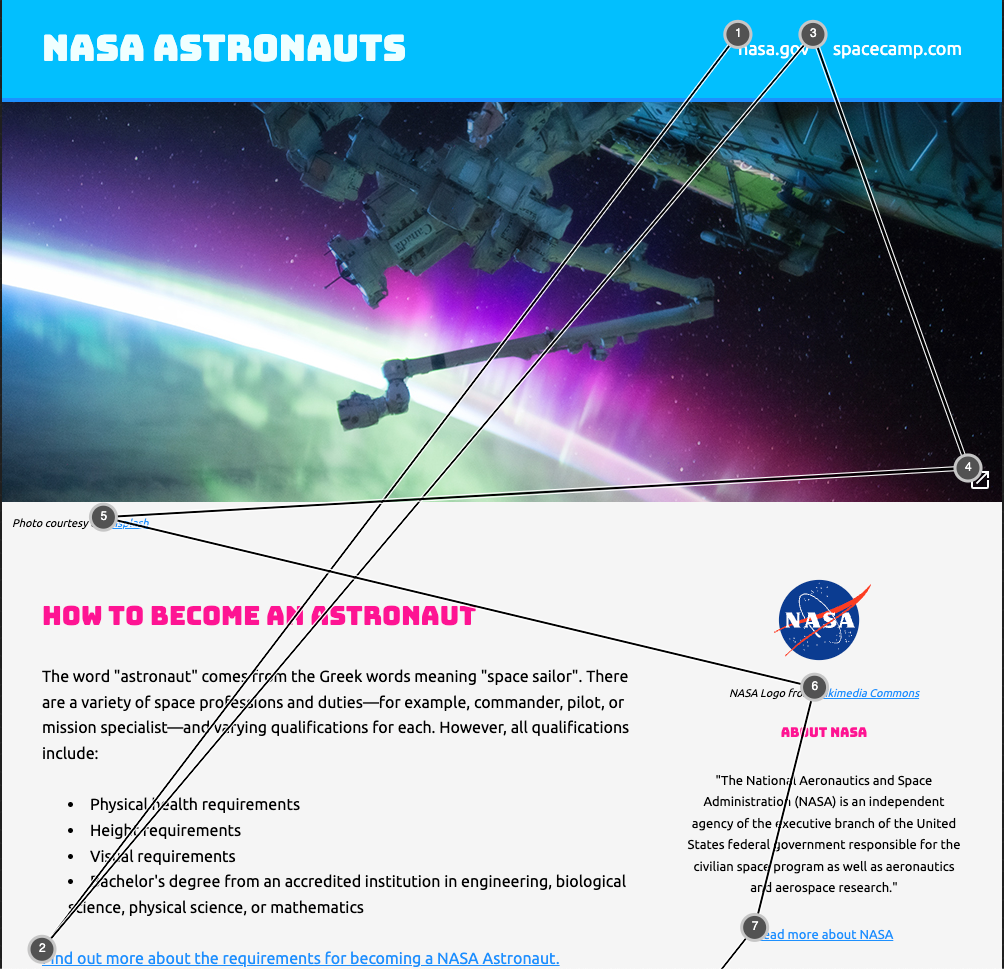

There is a keyboard checker mode that visualizes the tab order of a page and highlights potential issues with focusable elements. This is useful for quickly checking whether the tab order makes sense, but it is not a substitute for manual keyboard-only testing, since it may not always be accurate. Simple cases where most focusable items are links, buttons, or form controls seem to work reliably enough, but more complex features like <video>, <audio>, or <iframe> elements may not be reflected in the tab order visualization.
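To illustrate why a page can end up with a “funky” tab order, here is a simplified Python sketch of how browsers order focusable elements by `tabindex`: elements with a positive `tabindex` come first in ascending order (ties broken by document order), then elements with `tabindex="0"` or natively focusable elements in document order, while negative `tabindex` values are skipped. The `Element` record is a hypothetical stand-in for DOM nodes, and the sketch ignores visibility, shadow DOM, and iframes, which are among the cases where a visualization can drift from real behavior.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Element:
    """Hypothetical stand-in for a DOM node (not a real browser API)."""
    name: str
    tabindex: Optional[int] = None  # None means no tabindex attribute
    natively_focusable: bool = False  # e.g. links with href, buttons, inputs


def tab_order(elements: list[Element]) -> list[str]:
    """Return names in simplified sequential focus order.

    `elements` must already be in document order.
    """
    positive = []  # (tabindex, document position, name)
    natural = []   # (document position, name)
    for position, el in enumerate(elements):
        if el.tabindex is not None:
            if el.tabindex > 0:
                positive.append((el.tabindex, position, el.name))
            elif el.tabindex == 0:
                natural.append((position, el.name))
            # Negative tabindex: focusable by script, skipped when tabbing.
        elif el.natively_focusable:
            natural.append((position, el.name))
    positive.sort()  # ascending tabindex, then document order
    return [name for _, _, name in positive] + [name for _, name in natural]


page = [
    Element("logo link", natively_focusable=True),
    Element("search", tabindex=2),
    Element("menu button", natively_focusable=True),
    Element("footer link", tabindex=1),
    Element("custom widget", tabindex=0),
    Element("hidden helper", tabindex=-1, natively_focusable=True),
]
print(tab_order(page))
# ['footer link', 'search', 'logo link', 'menu button', 'custom widget']
```

The footer link jumps to the front purely because of its positive `tabindex`, which is exactly the kind of surprise a tab order visualization makes easy to spot.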

The extension also allows you to scan multiple pages and download the results into a spreadsheet that summarizes the findings overall as well as broken down by page. To do this, you select “Start storing scans” from the dropdown next to the “Scan” button, then scan whichever pages you want. Then, from the same dropdown, you can choose to download the stored scans in an Excel-formatted spreadsheet (.xlsx). Be careful about closing developer tools while scanning multiple pages, however: the stored scans are deleted automatically when the browser or developer tools are closed.

There are a few usability problems worth noting. One is that when you click on an issue, the element in question is highlighted in the browser, but this is done by placing a styled <div> over the element, which makes it more difficult to inspect the element to see its markup. There also doesn’t seem to be a way to remove the focus highlighting other than clicking on a different issue or reloading the page.

Also, the element descriptions are hard to parse, since they’re specified by where they are in the document like “/document[1]/main[1]/list[1]/listitem[7]/paragraph[1]”. This isn’t a massive problem if you’re using the extension, since you can click on the issue to highlight the element, but if you’re reviewing a spreadsheet, it’s fairly inscrutable.
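If you’re working from the spreadsheet, a small script can make these paths easier to scan. The Python sketch below is a hypothetical helper, not part of IBM’s tooling, and it assumes only the format shown above: slash-separated `role[n]` segments where `n` appears to be a 1-based position among siblings with that role.

```python
import re

# Matches one path segment such as "listitem[7]" (assumed format).
SEGMENT = re.compile(r"^([A-Za-z-]+)\[(\d+)\]$")


def ordinal(n: int) -> str:
    """Return '1st', '2nd', '3rd', '4th', ..., '11th', '21st', etc."""
    if 10 <= n % 100 <= 20:
        suffix = "th"
    else:
        suffix = {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")
    return f"{n}{suffix}"


def describe_path(path: str) -> str:
    """Turn '/document[1]/main[1]/listitem[7]' into a readable breadcrumb."""
    parts = []
    for segment in path.strip("/").split("/"):
        match = SEGMENT.match(segment)
        if match is None:
            parts.append(segment)  # leave unrecognized segments untouched
            continue
        role, index = match.group(1), int(match.group(2))
        if role == "document":
            continue  # every path starts here, so it adds no information
        # Mention the position only when it isn't the first of that role.
        parts.append(role if index == 1 else f"{ordinal(index)} {role}")
    return " > ".join(parts)


print(describe_path("/document[1]/main[1]/list[1]/listitem[7]/paragraph[1]"))
# main > list > 7th listitem > paragraph
```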

Another usability problem is the use of “Learn more” links within the descriptions of issues. Generic link text like this is a well-known problem for users with a variety of disabilities, because the link doesn’t describe its destination when it’s read out of context, such as in a screen reader’s list of links. It’s disappointing to see this pattern in a tool for evaluating accessibility, especially since people with disabilities are an important segment of the community doing accessibility work on codebases.
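A check for this pattern can be sketched as grouping links by their visible text and flagging any text that points to more than one destination. This Python sketch is illustrative only: the `(text, href)` pairs and the case and whitespace normalization are assumptions, not how IBM or any other tool implements its rules.

```python
from collections import defaultdict


def ambiguous_links(links: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Return link texts reused for different destinations.

    `links` is a list of (link text, href) pairs in document order.
    """
    destinations: dict[str, set[str]] = defaultdict(set)
    for text, href in links:
        # Normalize case and whitespace so "Learn  more" and "learn more" match.
        key = " ".join(text.split()).lower()
        destinations[key].add(href)
    # Sort hrefs so the result is deterministic.
    return {text: sorted(hrefs) for text, hrefs in destinations.items() if len(hrefs) > 1}


links = [
    ("Learn more", "/pricing"),
    ("learn more", "/security"),
    ("Contact us", "/contact"),
    ("Contact us", "/contact"),
]
print(ambiguous_links(links))
# {'learn more': ['/pricing', '/security']}
```

Repeated text pointing to the same place (“Contact us” above) is left alone, since it isn’t ambiguous.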

Reputable Sources - Outstanding (4/4)

IBM uses WCAG 2.2 as the basis for its rules, with a focus on Level A and Level AA success criteria, but it also includes rules based on Section 508 and other international accessibility standards, like the European EN 301 549 standard. Documentation mostly still references WCAG 2.1, but that’s to be expected given how recently WCAG 2.2 was released, and those documents remain largely authoritative because WCAG 2.2 mostly adds success criteria to 2.1 (the main exception being 4.1.1 Parsing, which 2.2 removed).

The full list of requirements that IBM uses is available on the Equal Access Toolkit website, and the information panel in the extension links to IBM’s documentation for each requirement along with other relevant reputable sources. One nitpick is that you need to go through IBM’s documentation before finding the link to a WCAG page like Understanding Success Criterion 1.4.1. IBM’s documentation does a good job of summarizing the admittedly dense and hard-to-understand WCAG documentation, but it would still be nice to have those links surfaced in the extension itself.

Accuracy of Feedback - Meets Expectations (2/4)

Before diving into the evaluation, we have to recognize our own limitations as reviewers. The testing rubric that we use for these reviews is three years old at this point, so it does not account for new success criteria from WCAG 2.2, and it also makes some assumptions about what automated tools can catch that may need to be reevaluated. There are also some Level AAA criteria that we’re testing for that automated tools don’t necessarily look for (even WCAG doesn’t recommend uniform AAA compliance).

The browser extension caught most of the issues on our test page that we would expect an automated tool to find, but it also missed a few. The Firefox and Chrome extensions also returned different results, with the Firefox extension missing an issue for a link without an accessible name that the Chrome extension caught.

The rules appear to be fairly strict, resulting in a few blanket warnings and at least one false positive. However, it also found issues that other comparable tools missed and flagged a few issues that need manual review based on content more so than code.

Since the extension and the underlying rules are open source, you can submit issues in the GitHub repo to report bugs, missed issues, and false positives.

To see all the test site issues that IBM Equal Access Accessibility Checker found or missed, skip to Accessibility Issues Found.

Clarity of Feedback - Exceeds Expectations (3/4)

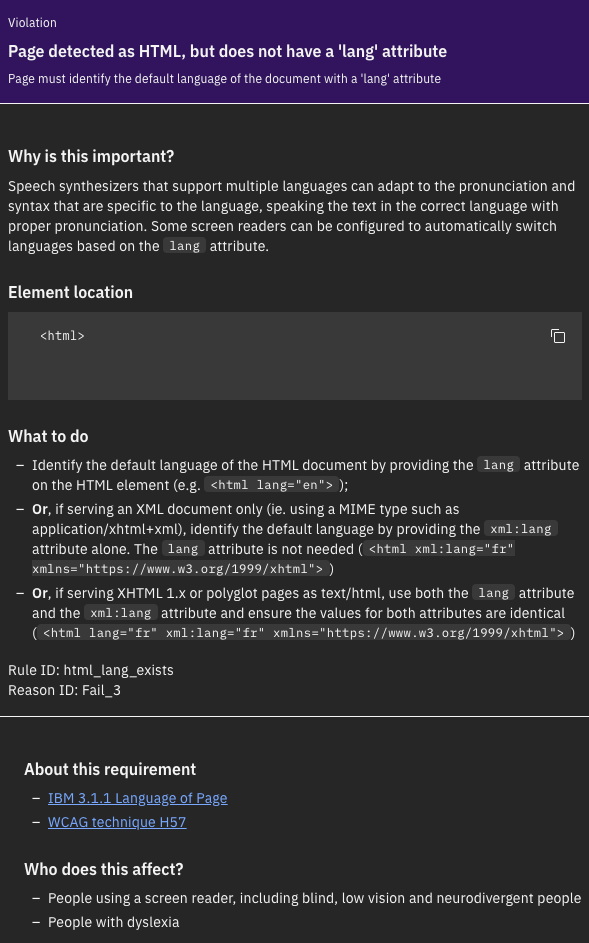

When you click on an issue, an information panel will update with relevant details about the issue, including important context about why it’s an issue and what to do about it. This is great for educating people who may not be well-versed in accessibility, as well as for making the case to stakeholders about why issues need to be fixed. For example, a Product Champion who doesn’t have deep technical knowledge could still use feedback from the extension to help prioritize work.

Issues are categorized as “Violation,” “Needs review,” or “Recommendation” with icons that imply a correlation to the severity of the issue. However, an issue that needs to be manually reviewed could be more of a barrier to accessibility than some violations. For example, insufficient color contrast is arguably more important to fix than a duplicate ID on an element, but the former would be flagged for review and the latter would be a violation.
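Color contrast is one check that can be computed exactly from two colors. WCAG defines the contrast ratio as (L1 + 0.05) / (L2 + 0.05), where L1 and L2 are the relative luminances of the lighter and darker colors, and Level AA requires at least 4.5:1 for normal text and 3:1 for large text. The Python sketch below follows that definition for opaque sRGB hex colors only; it does not handle transparency, text over images, or gradients.

```python
def relative_luminance(hex_color: str) -> float:
    """Relative luminance of an opaque sRGB color written as '#rrggbb'."""
    hex_color = hex_color.lstrip("#")
    channels = []
    for i in (0, 2, 4):
        c = int(hex_color[i:i + 2], 16) / 255
        # Linearize the sRGB channel. (Older WCAG text uses 0.03928 as the
        # threshold; for 8-bit values both thresholds give the same result.)
        c = c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4
        channels.append(c)
    r, g, b = channels
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: str, background: str) -> float:
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    # Level AA: 4.5:1 for normal text, 3:1 for large text.
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#000000", "#ffffff"), 2))  # 21.0
print(passes_aa("#777777", "#ffffff"))  # False (about 4.48:1)
print(passes_aa("#777777", "#ffffff", large_text=True))  # True
```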

IBM’s documentation describes a pace of completion process to address issues in order of impact, where Level 1 tasks are most essential and Level 3 tasks are lower priority but still necessary for compliance. However, that type of hierarchy isn’t reflected in the extension, which could lead to de-prioritizing important fixes. That hierarchy does show up in the spreadsheet reports, but if you’re only using the extension to scan pages, you’ll miss that important context.
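If you export results, you can recover that ordering yourself by sorting on the toolkit level first (1 being most essential) and using the extension’s category only to break ties. In this Python sketch, the `Issue` record, its field names, and the example levels are all hypothetical, chosen for illustration rather than taken from IBM’s report columns or rule assignments.

```python
from dataclasses import dataclass

# Lower number sorts first (assumed ordering of the extension's categories).
CATEGORY_RANK = {"Violation": 0, "Needs review": 1, "Recommendation": 2}


@dataclass
class Issue:
    """Hypothetical issue record; field names are illustrative only."""
    rule: str
    category: str  # "Violation", "Needs review", or "Recommendation"
    level: int     # toolkit level: 1 (most essential) to 3


def prioritize(issues: list[Issue]) -> list[Issue]:
    # Sort by level first so a Level 1 "Needs review" item outranks a
    # Level 3 "Violation"; category only breaks ties within a level.
    return sorted(issues, key=lambda i: (i.level, CATEGORY_RANK[i.category]))


# Example levels are made up, not IBM's actual assignments.
issues = [
    Issue("duplicate id", "Violation", 3),
    Issue("text contrast", "Needs review", 1),
    Issue("missing alt", "Violation", 1),
]
print([i.rule for i in prioritize(issues)])
# ['missing alt', 'text contrast', 'duplicate id']
```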

Cost - Outstanding (4/4)

The extension is free to use in supported browsers, and it does not have any premium add-ons or paid features to unlock functionality. There are also several packages for Cypress, Karma, and Node.js that could be used in workflows for automated accessibility testing and monitoring, but we did not evaluate those tools for this review. The repo is also open source under the Apache-2.0 license.

Strengths and Limitations - Outstanding (4/4)

The extension is easy to use for quick audits, and it catches a similar number of issues to other best-in-class tools, even surfacing some content issues that we wouldn’t expect an automated tool to detect. The tooling and rules are open source and maintained by IBM, which should help the tool keep improving over time.

The “Keyboard Checker Mode” is great for visualizing the tab order of a page, although you will still need to do manual keyboard testing. After all, not all accessibility issues can be automatically detected.

The feature that sets this tool apart the most is the ability to scan multiple pages and download the results into a report. This is massively helpful for performing accessibility audits, since you can quickly scan a representative sample of pages for a site and let the tool aggregate and summarize the results for you instead of having to do it manually.

Conclusion - 🏆 Highly Recommend (24/28)

The IBM Equal Access Accessibility Checker browser extension is an excellent tool to add to your accessibility testing toolkit. Because it can scan multiple pages, it makes a great first step in an accessibility audit: use it to create a report, then add results from other automated tools and manual testing, following the Swiss cheese model. The single-page scan and keyboard checker are also great on their own, so this is definitely worth incorporating into a regular development workflow as well.

This is the first tool in this series that we’ve reviewed that is competitive with the best-in-class axe DevTools. We already have team members using this extension in conjunction with axe to evaluate client work, and we’re highly likely to recommend incorporating this tool into our team’s standard development process.

Accessibility Issues Found

| Test Site Issue | Tools Should Find | People Should Find | IBM Equal Access Accessibility Checker Found |
|---|---|---|---|
| Insufficient contrast in large text | ✅ | — | ✅ |
| Insufficient contrast in small text | ✅ | — | ✅ |
| Form labels not associated with inputs | ✅ | — | ✅ |
| Missing alt attribute | ✅ | — | ✅ |
| Missing lang attribute on html element | ✅ | — | ✅ |
| Missing title element | ✅ | — | ✅ |
| Landmark inside of a landmark | ✅ | — | ❌ |
| Heading hierarchy is incorrect | ✅ | — | ❌ |
| Unordered list missing containing ul tag | ✅ | — | ✅ |
| ID attributes with the same name | ✅ | — | ✅ |
| Target for click area is less than 44px by 44px | ✅ | — | ❌ |
| Duplicate link text (lots of “Click Here”s or “Learn More”s) | ✅ | — | ❌ |
| div soup | ✅ | — | ✅ |
| Funky tab order | — | ✅ | ✅ |
| Missing skip-to-content link | — | ✅ | ❌ |
| Using alt text on decorative images that don’t need it | — | ✅ | ❌ |
| Alt text with unhelpful text, not relevant, or no text when needed | — | ✅ | ❌ |
| Page title is not relevant to the content on the page (missing) | — | ✅ | ❌ |
| Has technical jargon | — | ✅ | ❌ |
| Using only color to show error and success messages | — | ✅ | ✅ |
| Removed focus (either on certain objects or the entire site) | — | ✅ | ✅ |
| Form helper text not associated with inputs | — | ✅ | ❌ |
| Pop-up that doesn’t close when you tab through it | — | ✅ | ❌ |
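One common form of the missed heading hierarchy issue is a skipped level, such as an h2 followed directly by an h4. As an illustration of the kind of rule that could catch it, here is a Python sketch that takes heading levels in document order as plain integers (an assumption to keep the example self-contained). Skipped levels are often treated as a best-practice problem rather than a strict WCAG failure, so tools may report them differently or not at all.

```python
def skipped_heading_levels(levels: list[int]) -> list[tuple[int, int, int]]:
    """Return (index, previous level, level) for headings that skip a level.

    `levels` holds heading levels in document order, e.g. [1, 2, 4].
    Moving back up (h4 -> h2) is allowed; only jumps down by more than
    one level are flagged.
    """
    problems = []
    # Treat the start of the page as level 0, so a first heading below h1
    # is also flagged. Drop this if your pages legitimately start lower.
    previous = 0
    for index, level in enumerate(levels):
        if level > previous + 1:
            problems.append((index, previous, level))
        previous = level
    return problems


print(skipped_heading_levels([1, 2, 4, 2, 3]))  # [(2, 2, 4)]
```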